Leading chipmaker Nvidia has used the 2026 Consumer Electronics Show (CES) to launch Alpamayo, a platform promising self-driving cars that can “think like a human,” along with the Vera Rubin platform, a new generation of chips, the Guardian reports.

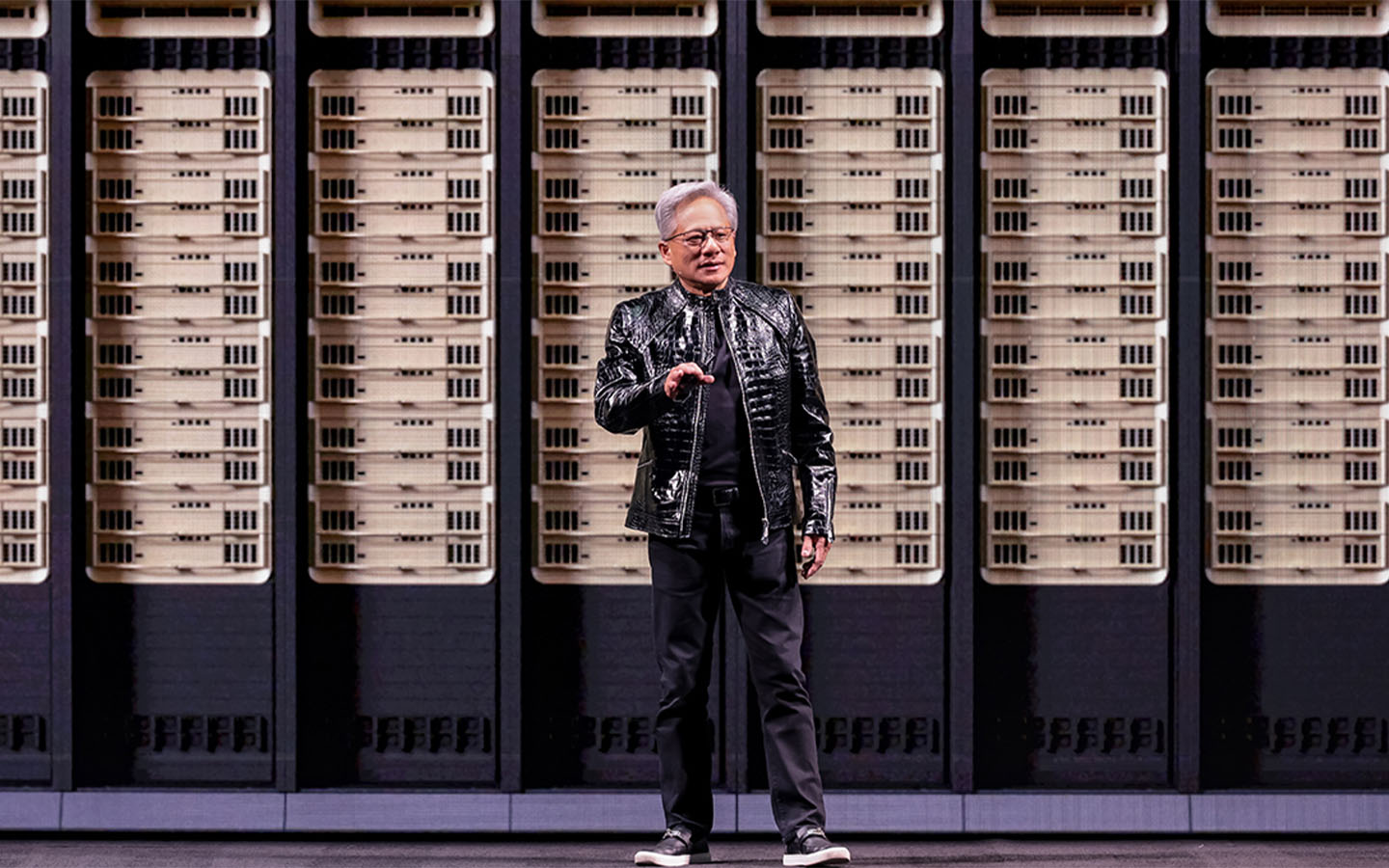

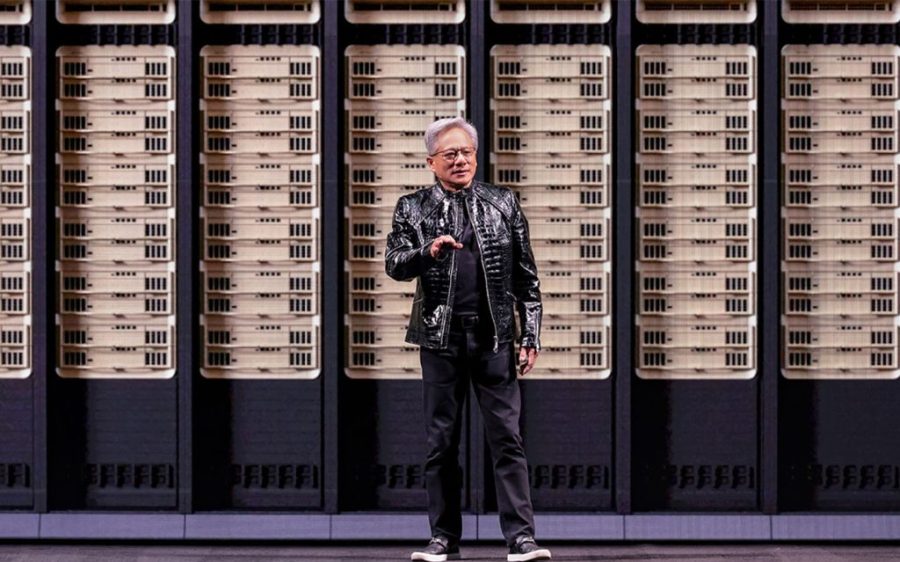

“The ChatGPT moment for physical AI is here, when machines begin to understand, reason and act in the real world,” Nvidia founder and CEO Jensen Huang told the crowd at this year’s show in Las Vegas.

The new Alpamayo platform is designed specifically to tackle the type of unusual or unpredictable driving scenarios that have long vexed the autonomous vehicle (AV) industry.

“Alpamayo brings reasoning to autonomous vehicles, allowing them to think through rare scenarios, drive safely in complex environments and explain their driving decisions,” Huang proclaimed. “It’s the foundation for safe, scalable autonomy.”

Alpamayo is fully open source, combining what Nvidia describes as the first open reasoning vision-language-action (VLA) model for the AV community with simulation tools and datasets. According to Nvidia, it offers “a cohesive, open ecosystem that any automotive developer or research team can build upon.”

Meanwhile, the Vera Rubin platform, integrating six new components designed to work in concert, aims to address the “skyrocketing” amount of computation necessary for AI.

“Rubin arrives at exactly the right moment, as AI computing demand for both training and inference is going through the roof,” Huang said at CES.

By tightly integrating innovation across chips, trays, racks, networking, storage and software, Nvidia says, the Rubin platform eliminates bottlenecks and slashes the high cost of scaling AI. Compared with the previous Blackwell platform, Rubin delivers up to a tenfold reduction in inference token cost and a fourfold reduction in the number of GPUs needed to train so-called “mixture of experts” (MoE) models, according to the company.

MoE architectures, which route each input to specialised sub-networks, or “experts,” much as the human brain draws on different regions depending on the task at hand, have become the new standard for building smarter AI.

With the AI buildup still hurtling forward at breakneck speed, dozens of the world’s leading AI labs, cloud service providers, computer makers and startups are slated to adopt Rubin. Nvidia Rubin is currently in full production, and Rubin-based products will be available from partners in the second half of 2026.